Recent advancements in generative models have highlighted the crucial role of

image tokenization in the efficient synthesis of high-resolution images. Tokenization, which transforms images into latent representations, reduces computational

demands compared to directly processing pixels and enhances the effectiveness

and efficiency of the generation process. Prior methods, such as VQGAN, typically

utilize 2D latent grids with fixed downsampling factors. However, these 2D tokenizations face challenges in managing the inherent redundancies present in images,

where adjacent regions frequently display similarities. To overcome this issue,

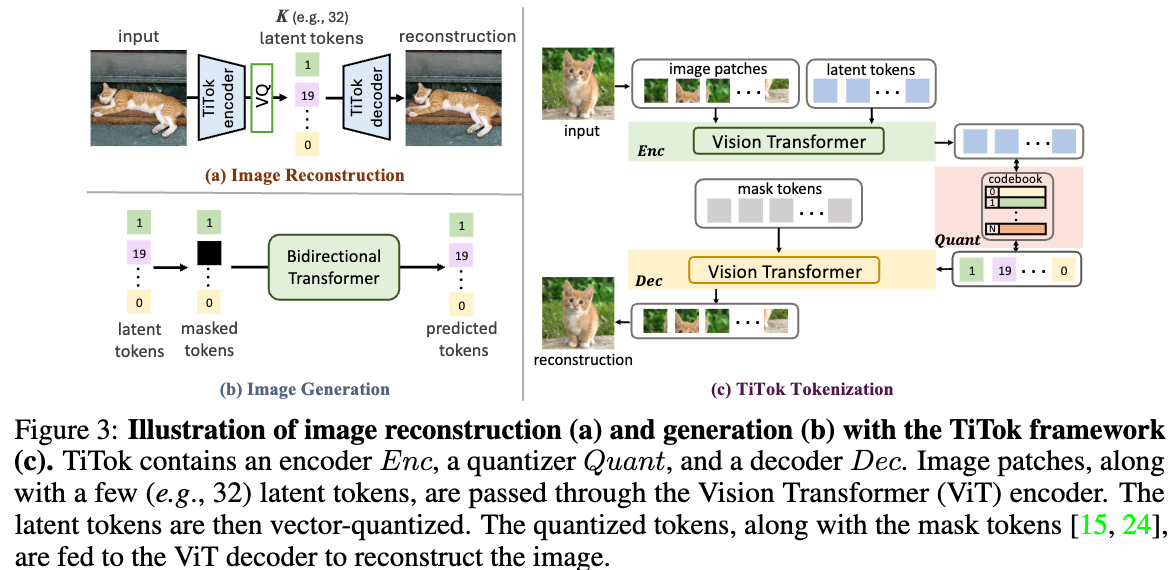

we introduce the Transformer-based 1-Dimensional Tokenizer (TiTok), an innovative

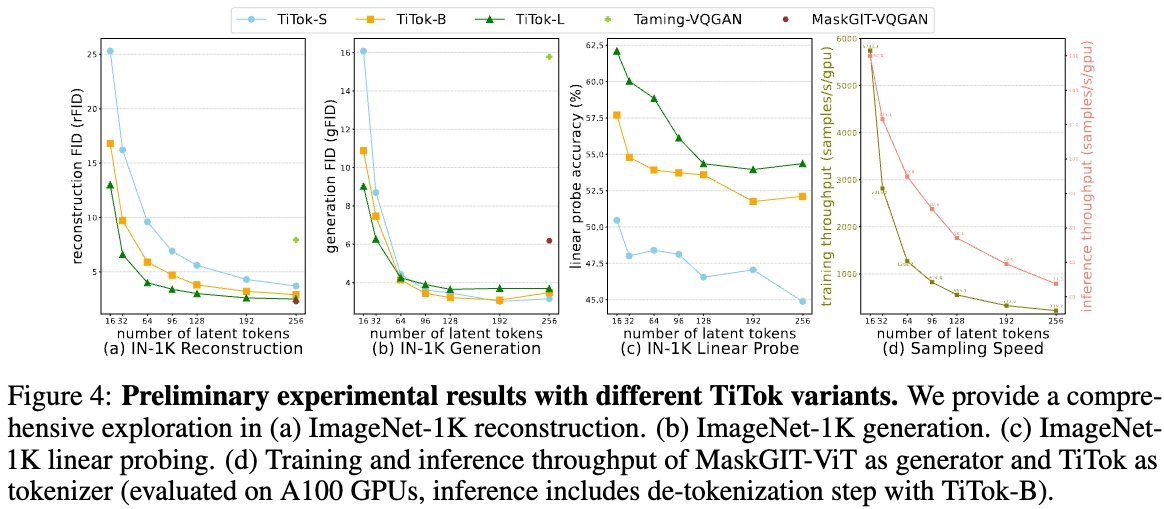

approach that tokenizes images into 1D latent sequences. TiTok provides a more

compact latent representation, yielding substantially more efficient and effective

representations than conventional techniques. For example, a 256 × 256 × 3 image

can be reduced to just 32 discrete tokens, a significant reduction from the 256 or

1024 tokens obtained by prior methods. Despite its compact nature, TiTok achieves

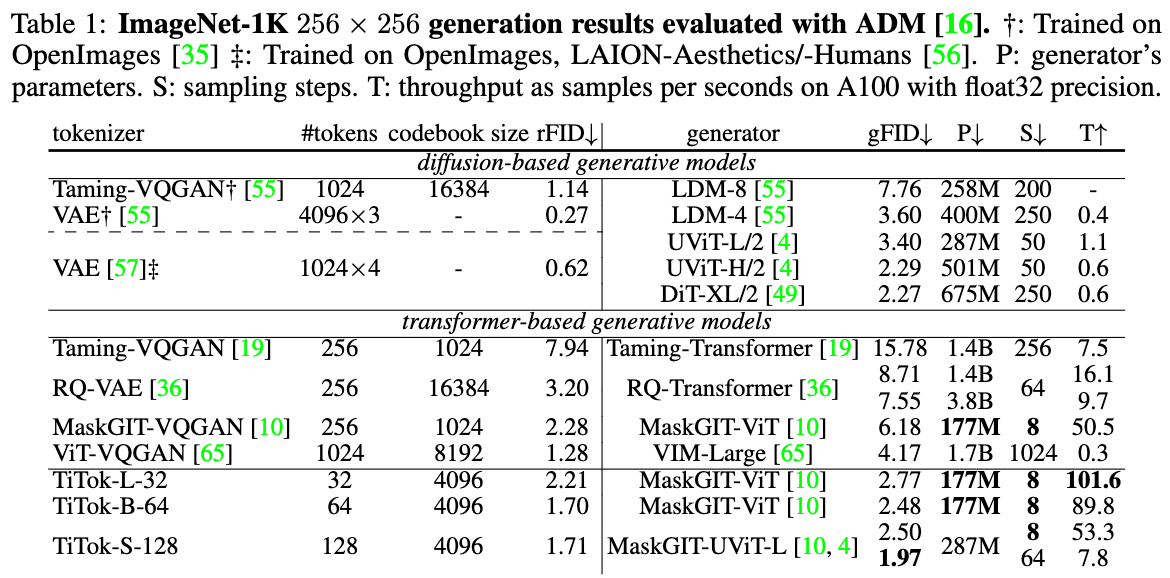

performance competitive with state-of-the-art approaches. Specifically, using the

same generator framework, TiTok attains 1.97 gFID, outperforming the MaskGIT

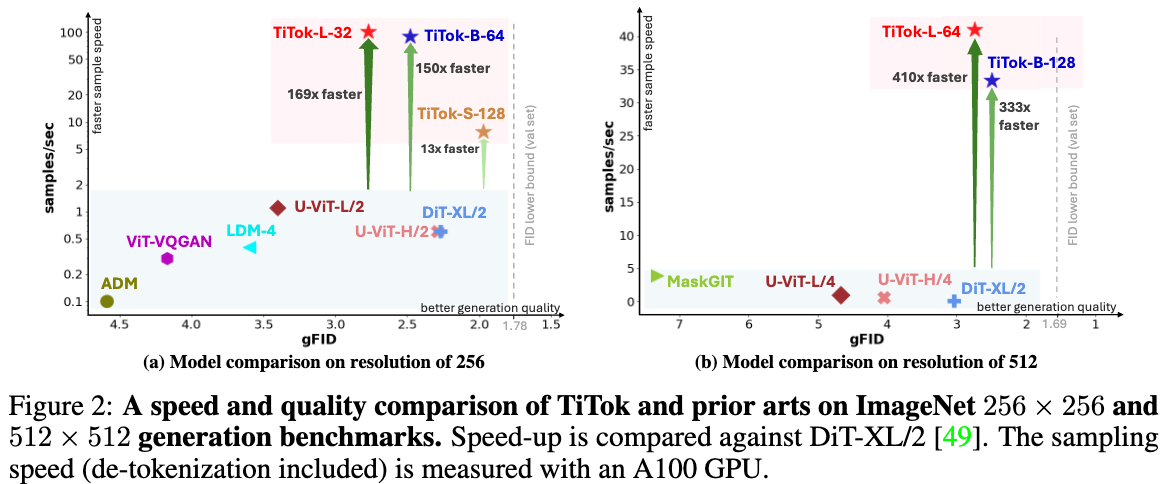

baseline by 4.21 on the ImageNet 256 × 256 benchmark. The advantages

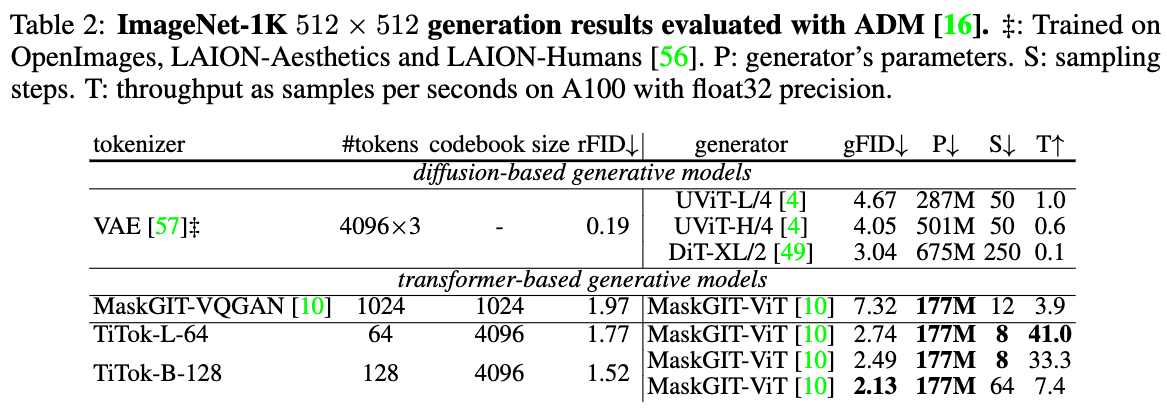

of TiTok become even more significant at higher resolution. On the

ImageNet 512 × 512 benchmark, TiTok not only outperforms the state-of-the-art diffusion model DiT-XL/2 (gFID 2.74 vs. 3.04), but also reduces the number of image tokens

by 64×, leading to a 410× faster generation process. Our best-performing variant

significantly surpasses DiT-XL/2 (gFID 2.13 vs. 3.04) while still generating

high-quality samples 74× faster.
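
The token counts quoted above for prior 2D tokenizers can be checked with a short calculation. The sketch below assumes a square latent grid and downsampling factors of 16 and 8, which are inferred here because they reproduce the 256 and 1024 figures; the helper name `grid_tokens` is hypothetical and not taken from any released code.

```python
def grid_tokens(image_size: int, downsample: int) -> int:
    """Number of tokens in a square 2D latent grid.

    Assumes the image side is divisible by the downsampling factor.
    """
    if image_size % downsample != 0:
        raise ValueError("image_size must be divisible by downsample")
    side = image_size // downsample
    return side * side


TITOK_TOKENS = 32  # 1D sequence length quoted for a 256 x 256 x 3 image

# Downsampling factors 16 and 8 are assumptions inferred from the token counts.
for factor in (16, 8):
    n = grid_tokens(256, factor)
    ratio = n / TITOK_TOKENS
    print(f"f={factor}: {n} grid tokens, {ratio:.0f}x more than {TITOK_TOKENS}")
```

Under these assumptions, a 2D grid needs 8 to 32 times as many tokens as the 32-token 1D sequence at 256 × 256. Because the 1D sequence is not tied to a spatial grid, its length does not have to follow from the image size and a downsampling factor.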